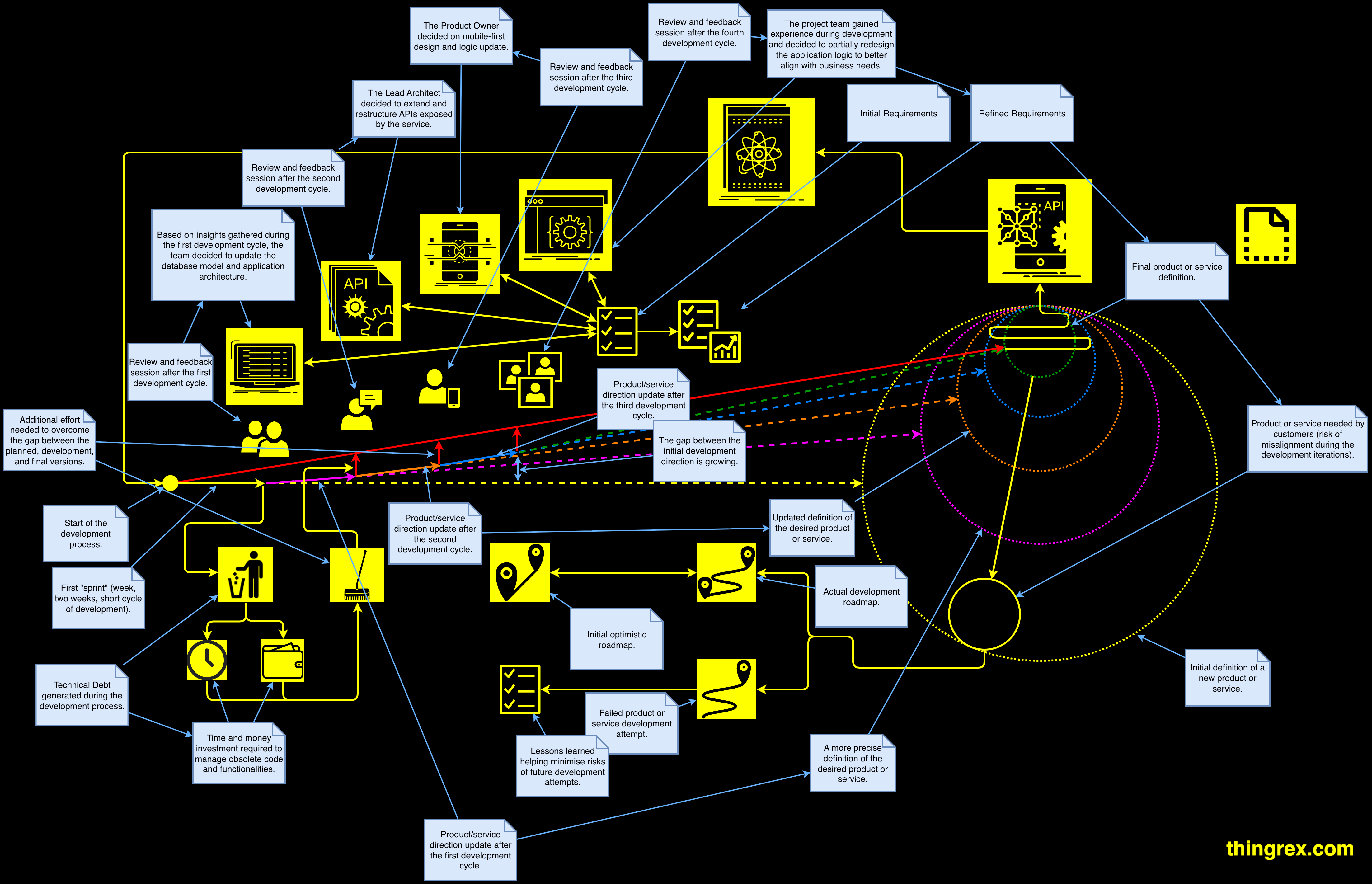

This diagram illustrates a pattern I see in hardware-enabled SaaS initiatives. You start with initial requirements and an (optimistic) roadmap.

Then reality shows up:

❌ The field data disagrees.

❌ Ops workflows change.

❌ Customers/stakeholders ask for “just one more adjustment.”

❌ The team constantly refactors APIs, reshapes the data model, rewrites logic, etc.

Each iteration does improve the product…

…but it also creates iterative debt: leftover code paths, half-migrated schemas, compatibility layers, rushed fixes, duplicated logic.

The bottom line: the project direction moves faster than your business can verify it. So the “gap” grows:

- gap between the planned and the actual roadmap

- gap between what you built and what customers need

- gap between engineering reality and business strategy

And you start paying for it twice:

- You pay to build the next version

- You pay again to adjust the old versions to the new requirements

In generic SaaS, this is annoying. In the industrial world, this results in:

- downtime in operations

- failed device updates

- exploding cloud bills

- vendors “shipping features” while you absorb the risk

The Solution:

What I do as a Fractional CTO in these situations is not “more agile.” I introduce governance for iterations:

✅ Decision checkpoints: after each cycle, what changed in requirements, and why?

✅ Architecture runway: what must be stabilized before the next sprint?

✅ Vendor accountability: who owns the cost of rework?

✅ Operational fit: will it work when connectivity is bad and humans are stressed?

Remember: iteration should reduce uncertainty. If it increases entropy, your process is broken.

👉 Use Q to email me “CHECK” and I’ll send you the 10-Question Reality Check I use to audit sprints.